Is Your Job-Related Testing Effective?

Donald J. Ford, Ph.D.

Has your job-related testing been put together effectively? A client of mine recently asked me to review a couple of tests that they use to verify the proficiency of their technical workers after training. Initially, they were concerned that some of the wording and answer choices were confusing to students and might be preventing them from passing. When I looked at the tests, I soon realized they had bigger problems than language and formatting. At face value, the test items were a jumble of different types. Multiple Choice, True/False, Fill in the Blank, and Matching items were randomly scattered throughout the 40-item test. Digging a bit deeper, I found that many of the items were indeed poorly written, rendering them either incomprehensible or dead giveaways.

As I began to edit the worst items to make them easier to understand, a nagging question kept popping up in the back of my mind: where did these items come from? I went back to the instructors of the course, all technical SMEs, and asked them. The answers I got were unsettling. Most of the instructors admitted that they had simply written their test items off the top of their heads, using knowledge they had acquired long ago on the job. When I asked them to show me where the knowledge had been taught in the course, most were unable to point to any specific piece of curriculum. Instead, they claimed it had been “covered” sometime during the course, without knowing exactly when.

When I met again with the sponsor and project manager, I pointed out that they had a much larger test problem than poor wording. They were using the test results to make personnel decisions about who got an entry-level position with the company. Failure to pass the test meant failure to get a permanent position with the firm. Given the high stakes involved, I informed my client that they needed to demonstrate that the test was directly job-related in order to use it to make personnel decisions.

When the client asked me for my source on this, I replied, “The United States Supreme Court.” I went on to explain that the Court has ruled repeatedly that any test used to make employment decisions must be job-related. Furthermore, courts have ruled that management has the responsibility to demonstrate this fact prior to using a test.

My client sighed with concern. “How do we demonstrate that our tests are job-related?” I proceeded to explain the various ways to demonstrate test validity, that is, whether a job-related test is valid for the purpose for which it is intended. After going through a litany of validities (face, content, construct, criterion), I suggested that we use content validity. It is the easiest method of those available, and yet it provides strong legal evidence of a clear link between a job and a test meant to measure proficiency to perform that job.

Clearly Identify What Is Required in Job-Related Testing

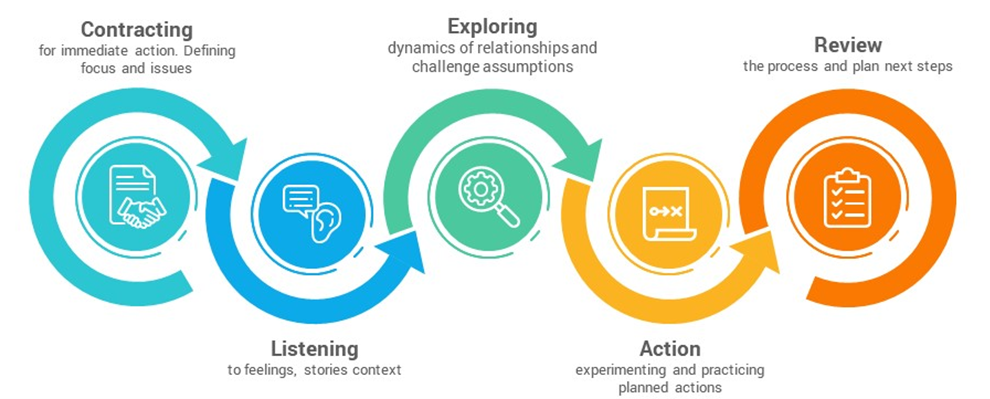

Content validity requires a clear linkage from the job through the training to the job-related test. This is best established by using the standard process outlined in Figure 1. It is divided into four phases, just like the instructional design process.

In phase one, a detailed analysis of the job, including an updated job description and job task analysis, must be completed to clearly identify the tasks and the knowledge, skills, and attitudes (KSAs) that are required.

In phase two, the tasks are converted to learning objectives, along with the knowledge, skills, and attitudes required to perform the tasks. The objectives form the link between the job and the training and are the basis for developing learning materials, curricula, and test items.

In phase three, the training is implemented and the test is administered to learners. It is very important to ensure that all content included in the final exam is taught in the class and is tied to a specific learning objective. This is how training departments can demonstrate that they have taught the skills necessary to perform the job. It also demonstrates to stakeholders that the exam is directly based on what was taught, which in turn was based on what people actually do on the job.

In phase four, after the tests have been administered and scored, the training department should analyze the exam’s overall performance in terms of passing rates and demonstration of learning. Next, they should conduct a detailed item analysis to determine whether all the items on the test are performing as they should. There are many software programs that can assist with test item analysis, but an expert in testing should interpret the results. If items are performing poorly, they should be edited or replaced with new items.
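As a rough illustration of the item analysis described in phase four, the sketch below computes two classical statistics for each item: difficulty (the proportion of learners who answered correctly) and an upper-lower discrimination index (how much better the top scorers did on the item than the bottom scorers). The function names, the 0/1 scoring layout, the group fraction, and the flagging thresholds are all assumptions chosen for illustration. They are not taken from the client project or from any particular software package, and a testing expert should still interpret the output.

```python
from statistics import mean


def item_analysis(responses, top_fraction=0.27):
    """Compute difficulty and discrimination for each test item.

    responses: one list per learner, holding 1 (correct) or 0 (incorrect)
    for every item. top_fraction is the share of learners placed in the
    upper and lower groups (0.27 is a common convention, assumed here).
    """
    n_items = len(responses[0])
    # Rank learners by total score, highest first.
    ranked = sorted(responses, key=sum, reverse=True)
    group_size = max(1, round(len(ranked) * top_fraction))
    upper, lower = ranked[:group_size], ranked[-group_size:]

    results = []
    for i in range(n_items):
        # Difficulty: proportion of all learners who got the item right.
        difficulty = mean(r[i] for r in responses)
        # Discrimination: upper-group proportion minus lower-group proportion.
        discrimination = mean(r[i] for r in upper) - mean(r[i] for r in lower)
        results.append(
            {"item": i + 1, "difficulty": difficulty, "discrimination": discrimination}
        )
    return results


def flag_items(results, min_difficulty=0.2, max_difficulty=0.9, min_discrimination=0.2):
    """Return item numbers that look too hard, too easy, or non-discriminating.

    The default thresholds are illustrative assumptions, not fixed standards.
    """
    return [
        r["item"]
        for r in results
        if r["difficulty"] < min_difficulty
        or r["difficulty"] > max_difficulty
        or r["discrimination"] < min_discrimination
    ]


# Hypothetical scores for four learners on a five-item test.
scores = [
    [1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 0, 0, 0],
    [1, 0, 0, 0, 1],
]
analysis = item_analysis(scores, top_fraction=0.5)
flagged = flag_items(analysis)
```

In this made-up data set, item 1 is answered correctly by everyone (a possible dead giveaway) and item 5 is answered correctly only by the lowest scorer, so both are flagged for review. A flag is a prompt to reread the item, not proof that it is defective.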

Of course, all of this was a lot more work than my client had bargained for, but eventually he came to see that the problems they had identified with the test content and format were really just the tip of the iceberg. Lurking below the surface were much more serious problems of test validity and reliability that could have landed this employer in court if a candidate who flunked out of the program chose to challenge the outcome legally. To help ease the burden, I developed a spreadsheet database that captured all the necessary information in one place and allowed us to quickly identify job-related testing problems and correct them. It also facilitated sharing sensitive information with a group of six instructors and allowed the team to track progress. Once the project finishes, the database will also serve as a repository for the test items and as documentation of the content validation that the company conducted. Eventually, this client plans to convert testing to an online system, and the database will then be used to populate it. Figure 2 contains the fields that make up the database.

LEGEND:

Test #/Version # – Used to track multiple tests and test versions. Not necessary for a single test.

Module # – Training module where item was taught

Objective – Learning objective being tested (must appear in Reference Curriculum), based on the job task analysis

Question – The question stem for each test item

Answer – The correct answer

Distractor 1, 2, etc. – Incorrect answer choices for multiple choice items. Note that you can add more columns for distractors if you need 3 or 4 of them.

Reference Curriculum – where the item was taught and what materials learners received

Reference Company – where the content of the training came from, such as company policies or industry standards

Notes – a place to capture miscellaneous information about a test item or to pose questions to reviewers

K or P – indicates whether the item tests (K)nowledge or (P)erformance of a skill. This choice may require different formats and administration for hands-on performance tests, so it is important to capture this distinction for skill-based training.
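For readers who want to see how the fields in the legend could support a content validity check, here is a minimal sketch. It models one row of the database and reports which linkage fields are still empty. The class name, attribute names, and the choice of which fields to treat as required are my assumptions for illustration; the actual spreadsheet simply used the column headings listed above.

```python
from dataclasses import dataclass, field


@dataclass
class ExamItem:
    """One row of the test item database (attribute names are hypothetical)."""
    test_version: str
    module: str
    objective: str
    question: str
    answer: str
    distractors: list = field(default_factory=list)
    reference_curriculum: str = ""
    reference_company: str = ""
    notes: str = ""
    k_or_p: str = "K"  # "K" for knowledge, "P" for performance


# Fields assumed necessary to trace an item back to the training and the job.
REQUIRED_LINKS = ("module", "objective", "reference_curriculum", "reference_company")


def validity_gaps(item):
    """Return the names of linkage fields that are missing or invalid."""
    gaps = [name for name in REQUIRED_LINKS if not getattr(item, name).strip()]
    if item.k_or_p not in ("K", "P"):
        gaps.append("k_or_p")
    return gaps


# Hypothetical rows with placeholder content.
documented = ExamItem(
    test_version="1/A",
    module="3",
    objective="Placeholder learning objective",
    question="Placeholder question stem",
    answer="Placeholder correct answer",
    distractors=["Placeholder distractor 1", "Placeholder distractor 2"],
    reference_curriculum="Placeholder lesson and handout",
    reference_company="Placeholder company policy",
)
undocumented = ExamItem(
    test_version="1/A",
    module="3",
    objective="",
    question="Placeholder question written from memory",
    answer="Placeholder correct answer",
)
```

Calling `validity_gaps(undocumented)` lists the objective and both reference fields as missing, which is exactly the situation I found with items written off the top of an instructor's head. An item with no gaps is not automatically valid, but an item with gaps cannot yet be defended as job-related.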
